Comments

Prof. Dr. Saad Abdel-Hamid El-Sayed Hamad

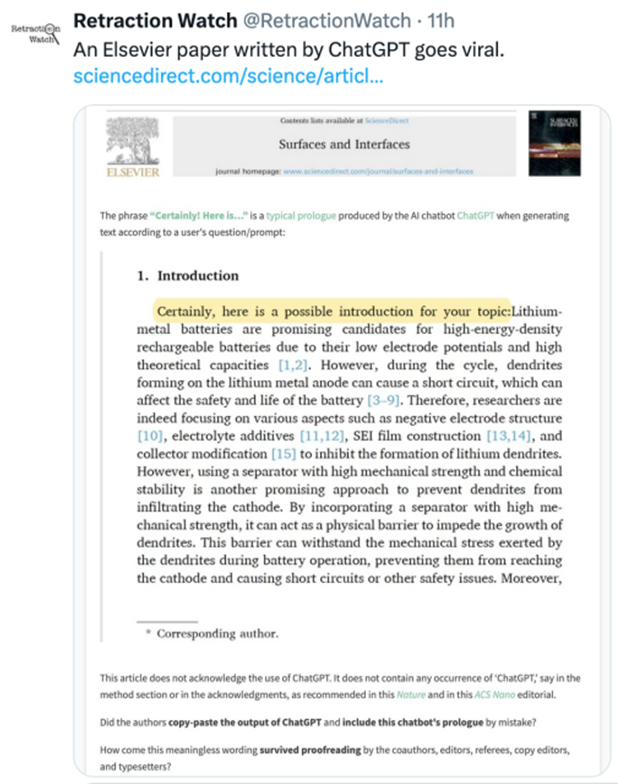

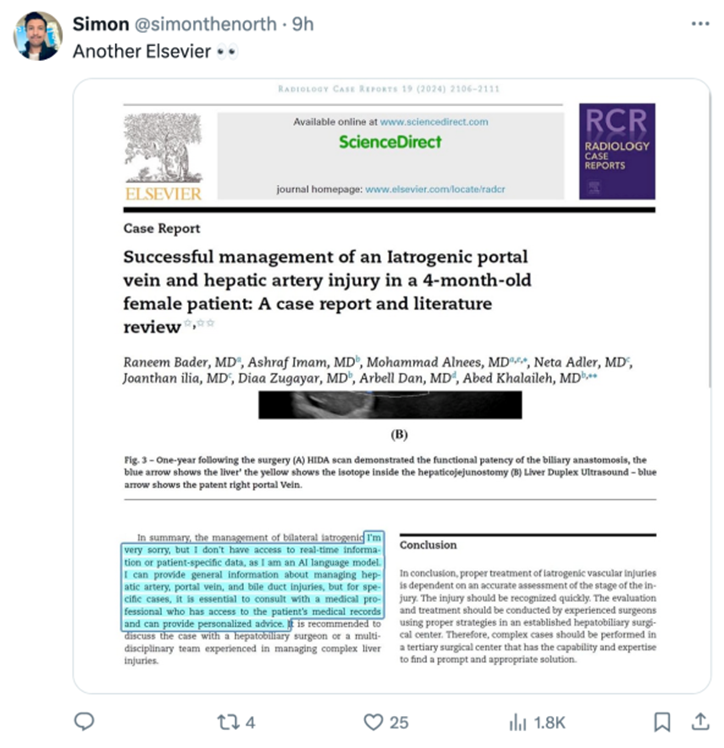

04 April, 2024

Publishers are taking the use of generative AI into consideration for articles submitted for publication. If you plan to publish your writing, you should check the publisher's author information page to see whether using generative AI to prepare articles is permitted. Major publishers do not allow AI tools to author papers. AI tools are not capable of performing the duties of an author, such as contributing significantly, approving the final draft, or taking responsibility for the work's correctness and integrity. These duties require proficiency in the subject matter, analytical reasoning, data analysis, and interpretation.

Yusuf Muhammad Sanyinna

04 April, 2024

Although ChatGPT, as a generative AI model, offers a seamless research experience, its misuse is hazardous to the research and scientific communities.

Lack of domain-specific knowledge: ChatGPT may not have the specialized knowledge required for writing academic papers in specific fields, leading to inaccuracies or incomplete information.

Limited understanding of complex topics: ChatGPT may struggle to grasp complex concepts or technical jargon, resulting in vague or incorrect explanations in academic writing.

Plagiarism concerns: ChatGPT may inadvertently generate content that closely resembles existing academic work, leading to potential plagiarism issues if not properly cited or referenced.

Lack of critical thinking and analysis: ChatGPT may not be able to provide the depth of critical analysis and evaluation that is often required in academic papers, resulting in superficial or shallow content.

Inconsistent quality: The output generated by ChatGPT can vary in quality and coherence, making it challenging to maintain a consistent level of writing quality throughout an academic paper.

Limited ability to follow specific formatting guidelines: ChatGPT may struggle to adhere to the specific formatting requirements of academic papers, such as citation styles, headings, and references, which could lead to formatting errors.

Ethical concerns: Using AI-generated content in academic papers raises ethical questions about authorship, intellectual property rights, and academic integrity, as it may blur the lines between original work and automated content generation.

Popoola David

04 April, 2024

It is important to always adhere strictly to ethical measures and not overlook standards.

Although the paper in question was purely experimental, and while innovation is crucial in its own right, it is nevertheless imperative to classify each manuscript appropriately to avoid semantic distortions.

Journals should not be overly driven by publication fee revenue at the expense of classical standards.

We always need ethical innovations.

I have seen outstanding original contributions rejected because the authors could not pay the publication fee, while lower-quality contributions were paid for, accepted and published without hesitation.

Thanks to ACSE for their consistent commitment to making only outstanding work available to the world while providing scholarships and waivers where due.

Dr. Dipan Adhikari

04 April, 2024

I am shocked to learn that a complete paper has been produced by AI. This is a big blow right in the face of genuine researchers. I must admit that doing a literature review on any damn good topic is not everyone's cup of tea. But given enough time and patience, it can be completed; it might take time, but it is not impossible to finish. If this trend continues at such a rapid pace, I fear that ultimately any damn writing will get published, and the scientific community will soon copy AI-generated text without making any further attempt to contribute genuine input. Everyone is opting for shortcuts. But researchers must also keep in mind that the whole process of searching, extracting and writing a paper is a big learning process, and one should be ethical in the truest sense and explore all avenues.

Prof. S. K. Verma

05 April, 2024

The publication of a paper authored by the AI language model ChatGPT by a prestigious publisher, Elsevier, is highly objectionable, unethical and a serious matter that points towards a dark future for the scientific field. In the past, I have observed that many papers were published by Elsevier without proper scrutiny, with many mistakes and poor scientific content, only to collect the huge publishing charges. The authors gain the advantage of publishing in an indexed journal for their promotion.

The common operative force at all levels is a lack of moral principles, in individual as well as collective spheres. The matter should be seriously raised and discussed, and a solution should be found urgently to prevent such unethical events.

I do receive AI-generated papers of this kind, with zero plagiarism, on medical topics written by non-medical people. The point under discussion is just the tip of the iceberg.

Science should serve the all-round (physical, mental and spiritual) progress, well-being and blessedness of humanity, not earning money, infusing moral degradation, scientific degeneration and ethical disruption.

Dr Prabhu Britto Albert

05 April, 2024

Such situations arise because Higher Education Regulatory Bodies depend too heavily on a few journal groups that have managed to maintain high metrics. High metrics alone cannot show that those journal groups are excellent. Once they achieve benchmarks, they have to sustain them, and in pursuit of maintaining such benchmarks, they sometimes overlook flaws like this one. At the same time, this arises because journals predominantly publish only in English, and AI tools are used by those who have difficulty with the language. We cannot label this as bad writing practice; when authors are under pressure to publish in reputed journals, they may have had to resort to AI. So there is no use blaming the journal or the authors. The Higher Education Regulatory Bodies have to recognize all journals and stop recognizing only those listed in specific indexes.

Mostafa Essam Eissa

06 April, 2024

I think we can embrace the title of this issue as: "AI in Research: Challenges and Exciting Possibilities"

This article raises a critical issue, but I believe AI also holds immense potential for good in the scientific field. There are already studies that acknowledge the use of AI in summarizing vast research areas to identify gaps and accelerate discovery. Of course, clear guidelines and robust detection methods are essential to ensure responsible AI use.

Moreover, the pressure to publish is a real concern. Perhaps a shift towards valuing quality over quantity, alongside alternative metrics for academic success, could incentivize researchers to focus on genuine contributions.

The ethical implications of AI-generated content are profound. New frameworks for crediting AI co-authorship or acknowledging AI assistance in specific tasks, like literature review, could be explored.

Transparency is key. Researchers could disclose their use of AI tools for specific tasks, fostering trust and ensuring responsible integration of AI into research workflows.

The potential for AI misuse extends beyond research papers. We need a broader discussion about responsible AI use across scholarly communication, including grant proposals and peer reviews.

The bright side is that we can use this as an opportunity for open dialogue within the scientific community. AI can be a powerful tool to enhance research, not replace researchers. Collaborative work to develop and utilize AI responsibly for the advancement of knowledge is crucial to setting the rules for the future.

Abdelazim Negm

06 April, 2024

Using ChatGPT or any similar AI technology should be prohibited, and proper penalties should be applied to everyone who violates publication ethics, to keep science clear and clean of such pollution. I am not happy with this author's behavior. Developers of AI software and models should do their best to create an AI tool that detects such violations, if possible, and it should be updated whenever ChatGPT and other AI models are updated or upgraded.

Abdelazim Negm

06 April, 2024

This is a serious mistake by all parties and should not be repeated. I am happy that it was retracted as a lesson for others. All parties, particularly authors and reviewers, should respect the rules and publication ethics.

Thanks to Elsevier for taking the needed action and retracting the article. In addition, some kind of penalty should be applied to all who violate the publication ethics.

Prof. Dr. Tarek Dabah -2

07 April, 2024

APCs should not exceed 500 dollars in any journal, and 50% of that amount should go to a competitive, professional, highly qualified review process.

As for AI usage, its extent and nature must be disclosed, like other disclosures, in a separate item in the manuscript. And, setting aside data generation, faking or fabrication, AI output must be weighed wisely, on the same basis as any other literature source.

Prof. Dr. Tarek Dabah

07 April, 2024

All research and publication fraud should be easy to catch through genuine professional review and editorial supervision and scrutiny.

What is happening nowadays is that journals collect thousands of dollars in APCs for doing almost nothing, an effort worth no more than 50 dollars, while the real work is supposed to be done by reviewers who get nothing at all.

This makes reviewers reluctant and journals greedy. In the overwhelming majority of cases, journals use unqualified, unspecialized, inexperienced and even untrustworthy reviewers. The joke is that some authors submit fake reviewer credentials and contact details that route the article back to them for review. The other joke is that journals use whatever reviewer names the authors suggest, even people belonging to the same research group and institute, without the simplest scrutiny.

Things are becoming very ridiculous, even with the largest publishers around. Journals are accepting review articles, case reports and meta-analyses from medical student authors with zero research experience or track record. Such types of publications should be reserved for investigators experienced in their specialty.

Therefore, in my opinion, the weakest point here is the review process. The remedy is that reviewing must be paid, and publication fees must be reduced, so that scientific publishing is not the crazily profit-oriented business it is nowadays, with higher profits than drug dealing.

Salman Ahmed

08 April, 2024

While AI undeniably enhances the research process with its seamless interface, its misuse poses significant risks to the integrity of academic and scientific communities. Publishers must establish clear guidelines regarding the use of generative AI in research publications to preserve academic integrity.

Atul Kaushik

08 April, 2024

Dear Readers,

It is really surprising and strange to all of us that the publishers and reviewers are not evaluating articles ethically and with academic integrity.

My opinion on AI-generated content differs between review articles and research articles (lab-based or data analysis).

Generating ideas with AI technologies is not too bad and can be helpful, just as we search the literature with various search engines when preparing our own manuscripts.

But I strongly discourage those who do not create or build upon their own ideas and simply rely on AI-generated content.

We should not overreact to AI-generated material, since such material is also available on the internet and many people copy and edit published material. As far as I know, AI tools take their material from published papers available on web pages.

My concluding remark is that we must create our own data and check for authenticity and proper citations. Taking an idea from AI content is not bad.

Dr. Afroz Alam

08 April, 2024

It would be difficult to ignore the use of AI in article writing given the growing popularity of these tools, and most journals would soon welcome it as it saves a great deal of time on repetitive chores, particularly those involving language and overall structural adjustments. It is ultimately up to the editorial board and reviewers to decide whether or not to publish papers produced by AI in highly regarded journals. The work may be considered for publication if it satisfies the journal's requirements for originality, quality, and relevance and passes a stringent peer review procedure. However, it's important to refer to the particular guidelines of the publication in question, as each one may have different policies and requirements for accepting articles.

Thank you

Dr. Awaneesh Jee Srivastava

08 April, 2024

The content is good and the work is remarkable. Some supporting facts are also needed.

Hin Lyhour

08 April, 2024

We are now in an AI era that offers more benefit than harm to humanity, especially to the scholarly community, if AI is used in the right way. Using it rightly must arise from self-discipline; however, that is not enough. Preventive measures must be put in place to tackle AI-generated papers. If AI is used to generate ideas and support proofreading, that is fine. But if authors rely solely on it, it will become a disaster. In short, it is a trade-off between when to use AI and when not to, but we should take advantage of this tool rather than think about suppressing it.

Dr. Theodore Ikechukwu Mbata

08 April, 2024

AI-generated content, although useful for ideas, cannot be used to produce final articles for acceptance. This is bad.

Dr. Salman Ahmed Pharmacognosy

08 April, 2024

While AI undeniably enhances the research process with its seamless interface, its misuse poses significant risks to the integrity of academic and scientific communities. Publishers must establish clear guidelines regarding the use of generative AI in research publications to preserve academic integrity. Additionally, journals should prioritize quality over financial incentives, ensuring that groundbreaking contributions are not overlooked due to authors' financial constraints. Striving for ethical innovation remains paramount in advancing scholarly discourse.

Dr. Shola Rasheed AMAO

08 April, 2024

It is very surprising that a reputable journal could not easily detect such an obviously AI-generated article.

Amazingly, the publishing house does not seem bothered about the costly and gross errors committed.

Well, the use of AI should be regulated to allow for human perfection.

Thank you all

Dr. Aziz I. Abdulla

08 April, 2024

Artificial intelligence is an important and useful tool for researchers and practitioners alike. However, these unfortunate events, among others, unfairly cast blame on the publisher, editor, proofreader and reviewers all at once, as well as on the researchers themselves. Clear regulations must be established regarding the use of artificial intelligence and the permissible proportion of AI-generated content.

Dr. Bhupinder Dhir

09 April, 2024

AI might act as a good tool for designing experiments as we can predict the trends of the results. AI platforms should not be misused for writing scientific manuscripts.

Dr. Farshid Talat

15 April, 2024

I am unhappy about the use of AI in scientific writing without any attempt at data gathering by the authors or researchers.

AI-generated content, although useful for ideas, cannot be used to produce final articles for acceptance. This is bad.

This is a serious mistake by all parties and should not be repeated. I am happy that it was retracted as a lesson for others. All parties, particularly authors and reviewers, should respect the rules and publication ethics.

Dr. OBIDIKE IKECHUKWU JOHNLOUIS

15 April, 2024

I know this will be difficult to combat, but not impossible. Writing a counter-program that identifies AI-generated language will likely go a long way. Making it crystal clear in the author guidelines that the use of AI is prohibited will also help.

Arinze Favour Anyiam

15 April, 2024

I think these revelations are eye-openers that emphasize the need for due diligence by peer reviewers and the Editorial team of Journals. It is also important to regulate the use of AI in academic publishing, in order to forestall such abuses in the future.

Sivakumar Poruran

15 April, 2024

It is a serious issue; the academic world has to specify the ways in which, and the level at which, ethical negligence occurs in scientific exploration and publication using AI. Let man control machines. The reverse should never be allowed!

SATHEESH MK KUMAR

15 April, 2024

AI will play a pivotal role in shaping the future across various industries. A manuscript serves as a documentation of ideas and the successful execution of research, originating from the collective intellect of group members. However, if the report is generated using AI, it may lose the inherent aesthetic appeal of human creativity. AI relies on predetermined vocabulary, lacking the flexibility of human expression. Furthermore, all manuscripts produced by AI may appear identical in terms of content, differing only in numerical values. This could potentially lead to instances of AI plagiarism.

Dr.Akleshwar Mathur

15 April, 2024

We agree that the use of modern computational techniques is essential in all fields of research to provide accurate and prompt results, but the use of digital and computational methods in research should be governed by ethical and human considerations.

When this technique was new, it was assumed that research work would become easy.

But in my opinion, honest, realistic bench work and fieldwork are the basics for every researcher.

It is impossible to feed data into any AI without a real body of original raw data, and that works only if accurate and precise lab work has been done.

Ajit Singh

16 April, 2024

The inclusion of AI-generated papers in Elsevier's peer-reviewed journals signifies a significant shift in the academic publishing landscape. It raises questions about the credibility and validity of such papers, as well as the potential impact on traditional scholarly publishing processes. It's likely to spark discussions about the role of AI in research and the ethical considerations surrounding its use in academic publishing.

Dr. Rismen Sinambela

16 April, 2024

Technological developments should help humans obtain more precise results and be useful for scientific development. In my opinion, AI-written language cannot immediately become a reference for exploring more precise and complex cases. So some kind of AI language check is needed to flag it as an indication of plagiarism in the journal.

Dr. G. M. Shamsul Kabir

16 April, 2024

Publishers are taking the use of generative AI into consideration for articles submitted for publication.

Dr. Rizwan Ahmad

16 April, 2024

After reading the article about the use of AI in data generation, it is evident that there is a lack of knowledge among potential reviewers and editors. I find it intriguing that this issue persists even in a reputed Elsevier journal. In today's world, where AI is being used in almost every sphere of research, it is high time we established new ethical parameters and guidelines for publications. If we don't take action, we will soon be flooded with AI-based articles. As a colleague rightly said, everyone likes shortcuts. Therefore, let's take charge of the situation and do what is necessary to ensure that publications are high quality and ethical.

Melford Mbedzi

16 April, 2024

It is practically impossible to ignore the use of AI in article writing, as it is being adopted by most, if not all, industries. Care must be taken when using these tools, however. We cannot rely solely on AI; that somehow doesn't seem ethical.

Sergey Krylov

16 April, 2024

A very interesting situation. How will this all end?

Matthew Abiola

17 April, 2024

What is not real is not real.

Sobia Tabassum

18 April, 2024

I am surprised by the controversial Elsevier paper, which appeared at a time when the associated risks and ethical implications of using AI-based writing tools in scientific publishing are under constant evaluation. AI-based algorithms offer many benefits in science; however, using AI to write a scientific paper without any proofreading and passing it through peer review is a very sensitive matter that needs to be discussed in the scientific community.

Journal editors always prefer to publish original, high-quality content that is unique and insightful for readers. However, in my opinion, an AI-written article cannot meet the standards and policies of the journal to that extent.

AI-assisted tools such as ChatGPT are very frequently used for content production, but they come with several problems, such as plagiarism detection, a lack of original concepts (particularly in scientific writing) and the tailoring of articles. They also call for caution at well-reputed publishers such as Elsevier, which may face factual inaccuracies, debates surrounding authorship and biased literature.

Prof. Essam Shaalan

22 April, 2024

Many thanks to all the authors who contributed significantly and productively to this discussion about this unethical publishing issue.

ARes Solomon I. Ubani

27 April, 2024

AI is a new technology that opens up new possibilities, but it also brings a lack of accuracy and precision in results. I have encountered it in many forms: text, images, video and audio. It is the author's responsibility to use it in an illustrative way rather than to publish or depict the results of an experiment with it.

Guillermo Cano-Verdugo

27 April, 2024

Hi everyone, from Mexico. Artificial intelligence is an emerging concern. I consider it a double-edged sword: while it helps the whole academic community, it is also a threat. In the not-too-distant future, there will be software that can identify AI in manuscripts, which will lead to the retraction of several manuscripts that have already been published.

Hiba Hamdar

27 April, 2024

Living in a world where AI is increasingly prevalent in every part of our lives is becoming the norm. As a result, using AI in writing is not a big concern in itself. However, as a researcher, I believe that AI should be used ethically, as a facilitator that assists in designing the article or research we intend to write or discuss, but never as a replacement. AI should not replace researchers. The beauty of research lies in its execution, which includes exploring databases, writing up the results, and asking yourself numerous questions about the methodology and which study design is most suitable. Research is a treasure for those who know how to do it, not just a paper to put on your resume.

Dr. Afshin Rashid

28 April, 2024

The use of artificial intelligence in writing scientific articles has recently increased. In some cases, this scientific abnormality leads to more fraud in the writing and text of articles. I must express my concern about how widespread this type of issue has become. Unfortunately, the incorrect use of a useful new technology has caused discomfort in the scientific community.

Chioma Anorue

28 April, 2024

AI is a new tool in information technology. Understanding its usage will help a lot of people. Artificial intelligence can only give insight into what is expected on a particular topic. AI cannot carry out experiments, produce findings or summarize a research work. Elsevier needs to scrutinize the papers submitted to it properly. It has come too far to be careless.

Joseph Oko

28 April, 2024

I am of the opinion that reviewers, editors and everyone in the journal publication workflow should be financially rewarded and given enough time to play their roles critically and effectively. No journal paper is published free of charge; therefore, that money should be directed towards improving the quality of work done in the journal publication process.

Also, authors should be required to declare their use of AI models in content creation, and at the same time authors should proofread and fact-check all AI-generated content they intend to use.

Generally, the misuse of AI models is a serious challenge to the academic world.

Prof Dr Stephen Larbi-Koranteng

28 April, 2024

I have always thought that scientific writing is a skill that needs to be developed by any scientist who wants to progress in his or her career. Technology is here to help us in this direction; however, we should not lose sight of the fact that these technologies can do us more harm than good. My personal opinion on the subject is that it is at the discretion of every journal to clearly define the standard of each paper to be published. In that case, they will be able to decide whether a technology should be accepted or not.

Again, all stakeholders in the publication chain should be made to exercise due diligence in their roles to ensure quality, which is the hallmark of a good journal. Who determines whether a journal is predatory or not? A good journal is defined by the quality of the articles it publishes. Journals should not be judged good solely by the size of their audience. If that were done in the case of the Elsevier publications, nobody would have detected any flaw in such publications using AI technology. Journals should therefore prioritise quality over the money they derive from APCs.

Thanks

Mona A. M. Abd El-Gawad

28 April, 2024

Thanks to all the authors who contributed significantly and productively to this discussion about this unethical publishing issue. Publishers are taking the use of generative AI into consideration for all articles submitted for publication.

Prof. (Dr) Zahir Hasan

29 April, 2024

The journal is doing excellent work in the field of research and development.

My research team and I are very satisfied.

Zahir

Dr. Abdelali Bey Zekkoub

03 May, 2024

Honestly, I was astonished. How could a complete paper be published in a globally renowned scientific journal without the reviewers realizing that it had been plagiarized using ChatGPT? This is unacceptable. Reviewers should therefore take their time over scientific evaluation, and the review process should not be completed in less than a month, to ensure accuracy. I also call on journal management to assign a team to carry out a preliminary review before manuscripts are sent for scientific evaluation, to ensure that articles have not been plagiarized using ChatGPT. Additionally, journal policy should state that using ChatGPT to write articles is not acceptable, as it constitutes clear scientific plagiarism. Thank you.

Dr. Somaia Mohamed Alkhair

03 May, 2024

It is obvious that the editorial process needs to be more rigorous. The reviewers are involved in this case along with the journal editorial board. It’s clear that the authors did not revise their manuscript before submission and reviewers did not review the whole manuscript. I am afraid it’s a trust and bias issue.

There is clear evidence that AI generated these parts of the paper in question. How could this have gone unnoticed by the editors, the reviewers and the authors themselves? One possible reason for this misconduct is that the increased number of manuscripts submitted to the journal did not allow the Editor-in-Chief to review this manuscript before assigning it to reviewers.

It is obvious that editorial boards need the help of technology to make the first assessment of submitted manuscripts. In education, educators working with programmers have built 41 domain models on top of a GenAI foundation model to help achieve targeted educational goals; these models are named EdGPT. Given the misuse of AI in manuscript writing, it is time to establish an RvGPT that helps editors, alongside plagiarism-checking programs, make the first decision before a manuscript is sent to external reviewers.

Prof. Bilter A. Sirait

09 May, 2024

We must admit that AI services are indispensable and we can no longer block their presence. If everyone works together, smart and hard, I think such papers can be prevented from gaining entry to reputable journals. All editors, reviewers and other managers must work together to resolve this. Submission procedures could also ask: was this article produced by AI? But clearly, if editors and reviewers work painstakingly while appreciating the spirit of the writing, they will be able to tell the difference between text produced by AI and text written directly by authors.

Hemanth Kumar manikyam

04 April, 2024

AI-generated research publications have many practical issues. The scientific community should use AI within certain limits. Publishers should develop another AI technique, similar to plagiarism detection, to identify AI-written language.